Moving from Contabo to Hetzner

I wrote another article about how I moved from Cuntabo to Webdock and why I am leaving this awful Cuntabo hosting company, so read it here, because I will not go into the details of how I did it again. But basically, I am using YunoHost (YNH), and it makes it so easy to manage a lot of services and transfer them to a new server. Love it!

The challenge: 1.4TB of data and some 15 services.

The previous move was about 100GB in size. This one is more than 10 times bigger. I had to find a host that provides a lot of storage space, is decent, and gives me full root control over the server. That is very hard to find. Webdock would not do it: they provide 500GB of disk space at most, so they were out of the question.

Why Hetzner?

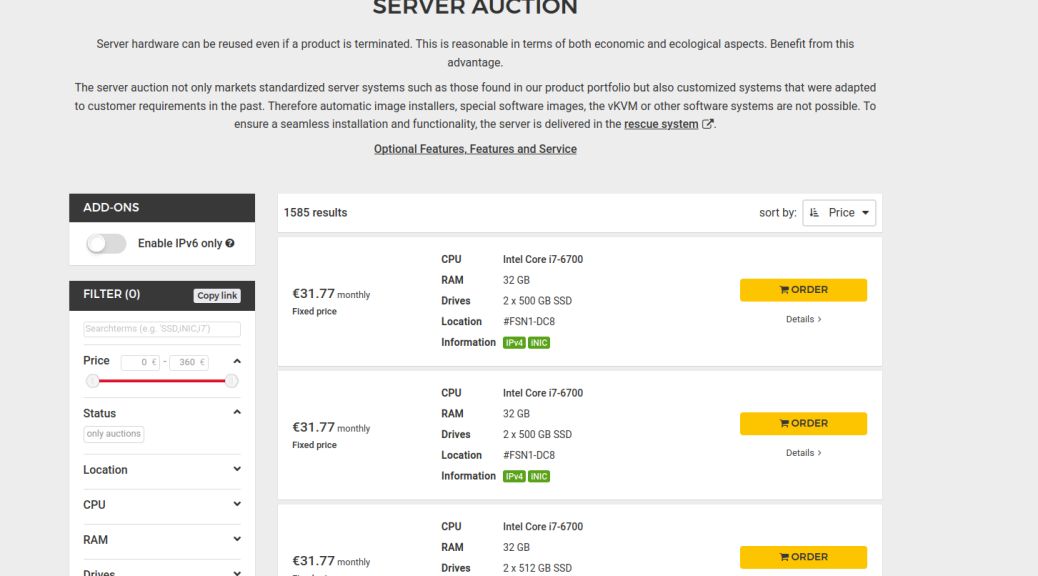

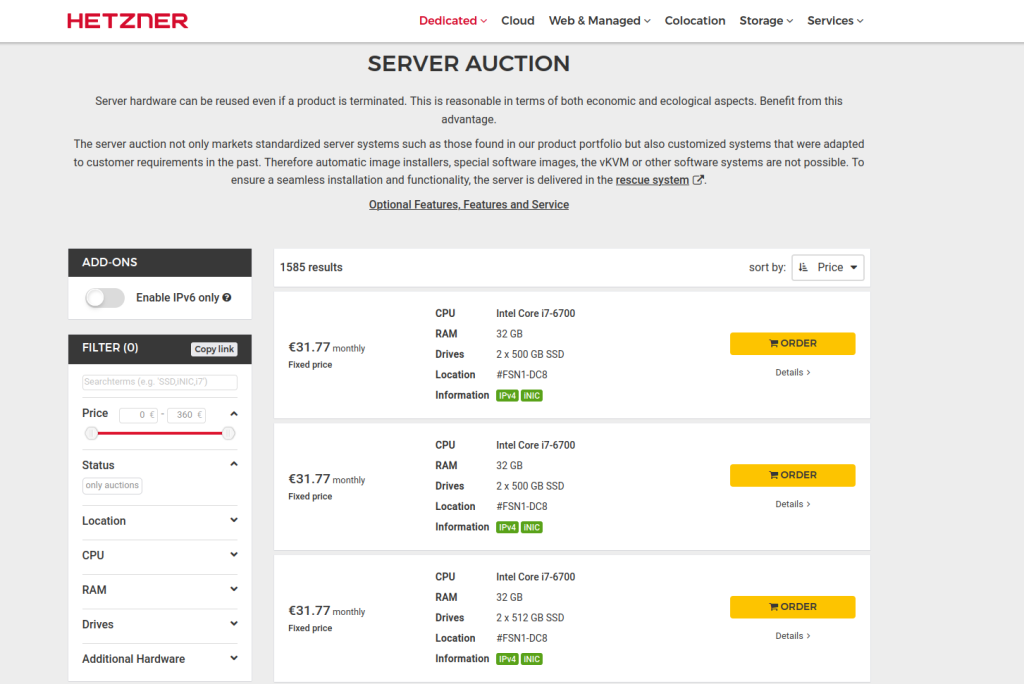

They seem very popular in Europe and are used by people who provide services similar to ours, and the feedback from these people is decent. Plus, they have server auctions: older but still good machines that you can rent, and they are cheap. There is a huge variety of them, so it was not that hard to find one with a lot of storage space.

2 x 1.92TB SATA SSDs, so almost 4TB of SSD storage, plus 32GB of RAM and an Intel i7-6700 CPU @ 3.40GHz with 4 cores / 8 threads.

That’s the one I rented, for only 45 Euros a month. On Cuntabo I paid 38 Euros for 2TB of storage, 64GB of RAM (too much for our needs anyway) and a 10-core virtual AMD CPU (the one we have now is more powerful, and the hardware is dedicated to us alone). Plus, I had to pay Cuntabo 4 more Euros a month for a new IP, because the old one had a terrible reputation: we hosted all sorts of services like Invidious, Searx and the like, Google and the rich crew started to ban our IP, and so all of our services were affected.

Anyway, with Cuntabo I was paying 42 Euros a month for 2TB of SSD storage, and now I pay 45 Euros a month for almost 4TB of SSD storage and a better overall server. Not bad!

You see, we were running out of disk space and had to either move to a new server or use object storage, so I was ready to pay some 10-20 Euros more a month for 1-2TB of extra storage. Now I get that for just 3 more Euros a month!
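As a quick sanity check of the comparison above, here is the monthly price per terabyte of SSD storage for both servers, using only the figures from this post (42 Euros for 2TB before, 45 Euros for 2 x 1.92TB = 3.84TB now). It is a sketch that looks at storage alone and ignores the rest of the hardware; the helper name is just an illustration.

```shell
#!/usr/bin/env bash
# Price per TB of storage: monthly price in Euros divided by capacity in TB.
# awk does the decimal division that plain shell arithmetic cannot.
eur_per_tb() {
  local price_eur="$1" capacity_tb="$2"
  awk -v p="$price_eur" -v t="$capacity_tb" 'BEGIN { printf "%.2f\n", p / t }'
}

# Usage:
#   eur_per_tb 42 2      # old server: 2TB for 42 EUR/month (incl. the extra IP)
#   eur_per_tb 45 3.84   # new server: 2 x 1.92TB for 45 EUR/month
```

That gives 21.00 Euros per TB before and about 11.72 Euros per TB now, so the price per terabyte dropped to roughly 56% of what it was.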

I also feel good using older hardware so it does not go to waste 😉

How to manage a dedicated server?

A dedicated server is just a computer somewhere else. It has no management tools besides a basic panel that lets you change a few things and restart the machine. Therefore, no snapshots... but do I even need them?

Snapshots – bad??

I mentioned before that I NEED to be able to make server snapshots and that I am obsessed with backups. BUT I started to realize that my practice was not good.

There are a few situations that stress me out in terms of server management and the services that we provide:

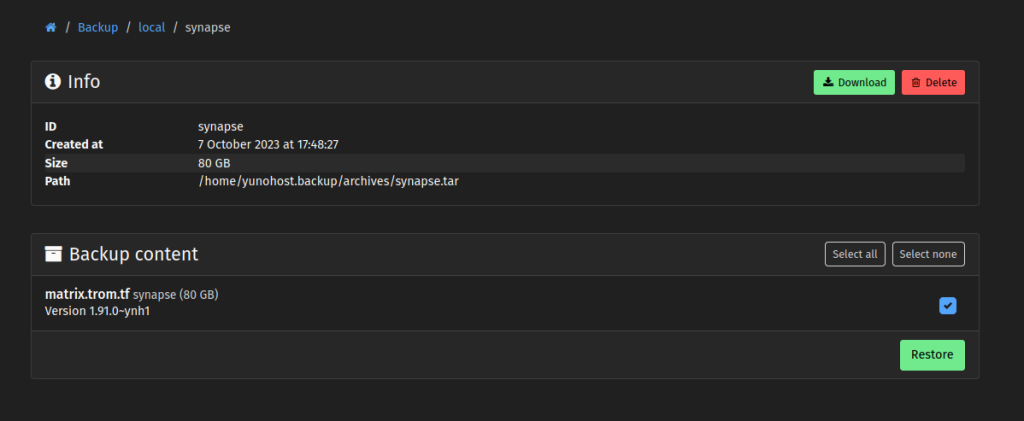

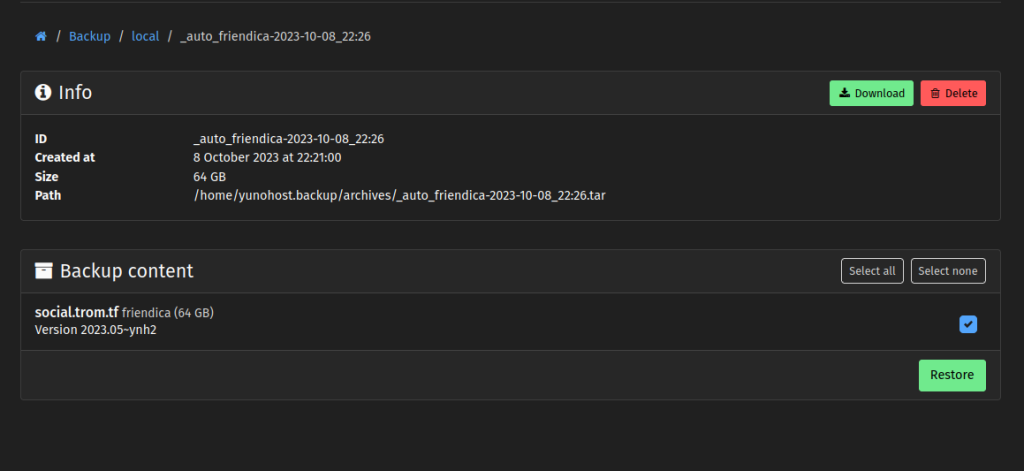

1. When I update either Friendica or Synapse (Matrix).

Both have massive databases, and the automatic backup that YNH makes before an update can take 30 minutes to an hour. It has to be run via the terminal, otherwise the YNH panel shows a timeout error (probably because of Nginx).

If the update then fails, the restoration takes another hour or two for each, meaning these services can be unavailable for a few hours after a failed update. Synapse gets updates quite often, so I am always stressed when I have to update these. And both of them have failed to update a bunch of times over the past years... Snapshots saved my ass...

2. When I do kernel/core system updates or migrate to a new Debian version.

These sorts of updates should be super stable; it is Debian, after all. But what if the shit hits the fan one day?!

3. When YNH upgrades to a new major version.

Same... so far so stable, but what if the shit hits the fan again?

4. When I do custom stuff to the system or make a mistake, like deleting some files...

This can happen, since at times I make custom changes for our services, although it is unlikely that I will do custom stuff to the system itself. Still...

So how?

Before, my dirty solution was to take a snapshot of the entire server before doing any of the above. If the shit hit the fan, I restored it in a few seconds. Of course, that’s not good practice. Imagine I update Synapse: even without a backup beforehand, the update itself can take 15-20 minutes. So I take a snapshot before I start the update. The update fails and Synapse is broken; it takes me 10-20 minutes to notice or to try to fix it. Now 30-40 minutes have passed, and I click the restore-snapshot panic button!

I have restored Synapse ALONGSIDE everything else to how it was 40 minutes ago. Any posts made on Friendica, files synced via Nextcloud and so forth are ALL LOST. BAD!

You can see why this is a very, very BAD practice, and I’ve done it a dozen times over the past years, simply because the alternative bothered me. Like I said, it would take me 1-2 hours to restore Synapse from a backup. And frankly, I never tried to restore these properly, because the few times I tried via the YNH panel, the restores failed.

So how to manage it all?

First, YunoHost is great at managing updates: it forces a backup of the app and only updates it after the backup is done. Now that I have a lot of experience restoring stuff from backups, I realized that doing it via SSH (the command line) is not too scary, even for massive services like Synapse or Friendica. So we are covered there: if an update fails, restore the app via the command line, even if it takes an hour or so.
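Since the panel times out on the big apps, the upgrade itself can also be started over SSH. A minimal sketch, assuming the apps are installed with the IDs `synapse` and `friendica` (check with `yunohost app list`); the helper name is hypothetical. `yunohost app upgrade` makes its safety backup of the app first, just like the panel does, and only then upgrades.

```shell
#!/usr/bin/env bash
# Upgrade one YNH app over SSH instead of the web panel, so the long
# pre-upgrade backup cannot hit the panel's timeout.
upgrade_app() {
  local app_id="$1"   # e.g. synapse or friendica (see: yunohost app list)
  if ! yunohost app upgrade "$app_id"; then
    # Point to where the pre-upgrade backup can be found for a manual restore
    echo "Upgrade of $app_id failed; check the output above and: yunohost backup list" >&2
    return 1
  fi
}

# Usage (as root):
#   upgrade_app synapse
```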

As for the rest: it is unlikely that Debian or YNH updates will go bad, but just in case, I installed and set up Timeshift. With it I scheduled hourly and daily backups, keeping 6 hourly and 3 daily ones. It uses rsync to create backups of the entire system on the same hardware. I chose to exclude the /home folder, where the YNH data is stored (Peertube videos, Nextcloud files, etc.): it makes no sense to back up terabytes of data that are already backed up on Borgbase.com.
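Besides the schedule, Timeshift can take a snapshot on demand from the command line, which is handy right before a Debian or YNH major upgrade. A minimal sketch using Timeshift's own CLI flags (`--create`, `--comments`, `--tags O` for an on-demand snapshot, `--list`); the helper name and the comment text are only illustrations. The exclusions from Timeshift's settings, such as /home, apply to these snapshots too.

```shell
#!/usr/bin/env bash
# Take an on-demand Timeshift snapshot (tag "O") before a risky system upgrade,
# then list the snapshots to confirm the new one exists.
snapshot_before_upgrade() {
  local reason="$1"   # free-text comment stored with the snapshot
  timeshift --create --comments "$reason" --tags O && timeshift --list
}

# Usage (as root):
#   snapshot_before_upgrade "before Debian major upgrade"
```

Going back is `timeshift --restore`, which should ask interactively which snapshot to restore.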

So yeah, now I have system backups in case the shit truly hits the fan.

Therefore, with YNH + Borg + Timeshift you can have a safe setup even if you go for a dedicated server.

We’ve also looked into Proxmox, and it looks very promising: with it you get a proper panel and control over your dedicated server, with snapshots, backups, etc. Roma, the wonderful human, helped with testing Proxmox. However, since it is something new and a whole extra layer under the entire YNH install, we need more time to test it and see if we can use it. For now I decided to go ahead without server snapshots, for all of the reasons mentioned above; Proxmox would mostly be useful for exactly those cases, where you can make snapshots in seconds.

So how to move big YNH backups?

The Webdock move taught me a lot about moving YNH from one server to another. I took many notes, so this time I was ready and everything went very smoothly, but moving big backups is a bit different. Here’s how:

Our Peertube backup (archive) is 800GB in size, and it is already backed up on Borgbase.com. So I connect the new server to my Borg repo, list the archives, and then download the backup WITHOUT the data:

```shell
app=borg
# Export the Peertube archive from Borg as a .tar into YNH's archive folder,
# excluding the app's data directory so only the small part is downloaded
BORG_PASSPHRASE="$(yunohost app setting "$app" passphrase)" \
BORG_RSH="ssh -i /root/.ssh/id_${app}_ed25519 -oStrictHostKeyChecking=yes" \
borg export-tar -e apps/peertube/backup/home/yunohost.app \
  "$(yunohost app setting "$app" repository)::_auto_peertube-2023-10-08_22:26" \
  /home/yunohost.backup/archives/_auto_peertube-2023-10-08_22:26.tar
```

Replace _auto_peertube-2023-10-08_22:26 with the name of your archive, and wherever you see “peertube” in the code, replace it with the name of your app. For example, my Peertube backup without the files is around 2GB in size. So tiny.
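To find the exact archive name to put into these commands, the same connection settings work with `borg list`, which prints every archive in the repository. A sketch assuming, as in the commands above, that the YNH Borg app is installed under the ID `borg` and stores its passphrase and repository as app settings; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# List all archives in the Borg repository configured by the YNH "borg" app,
# reusing the passphrase, SSH key and repository stored in its settings.
list_borg_archives() {
  local app=borg
  BORG_PASSPHRASE="$(yunohost app setting "$app" passphrase)" \
  BORG_RSH="ssh -i /root/.ssh/id_${app}_ed25519 -oStrictHostKeyChecking=yes" \
  borg list "$(yunohost app setting "$app" repository)"
}

# Usage (as root on the new server):
#   list_borg_archives | grep peertube
```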

Then extract the data. Go to the folder where the app data is stored, extract the data there, then move it into place and clean up:

```shell
cd /home/yunohost.app/
app=borg
# Extract only the app's data directory from the Borg archive into the current folder
BORG_PASSPHRASE="$(yunohost app setting "$app" passphrase)" \
BORG_RSH="ssh -i /root/.ssh/id_${app}_ed25519 -oStrictHostKeyChecking=yes" \
borg extract "$(yunohost app setting "$app" repository)::_auto_peertube-2023-10-08_22:26" apps/peertube/backup/home/yunohost.app/
# Borg recreates the full archive path, so move the data up to /home/yunohost.app/peertube
mv apps/peertube/backup/home/yunohost.app/peertube ./
# Remove the leftover (now empty) directory tree
rm -r apps
```

Again, replace _auto_peertube-2023-10-08_22:26 with the full name of your archive and “peertube” with the name of your app.

It took around 5 hours to download all of the Peertube data.
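For planning your own move, those numbers give a back-of-the-envelope average: 800GB in about 5 hours is roughly 44MB/s, or about 356Mbit/s. A tiny illustrative helper to redo the arithmetic with your own archive size and duration, assuming decimal units (1GB = 1000MB):

```shell
#!/usr/bin/env bash
# Average transfer rate from a size in GB and a duration in hours.
# Decimal units (1 GB = 1000 MB); prints MB/s and Mbit/s.
avg_rate() {
  local size_gb="$1" hours="$2"
  awk -v g="$size_gb" -v h="$hours" 'BEGIN {
    mb_per_s = g * 1000 / (h * 3600)
    printf "%.1f MB/s (%.1f Mbit/s)\n", mb_per_s, mb_per_s * 8
  }'
}

# Usage:
#   avg_rate 800 5   # the Peertube data from this move
```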

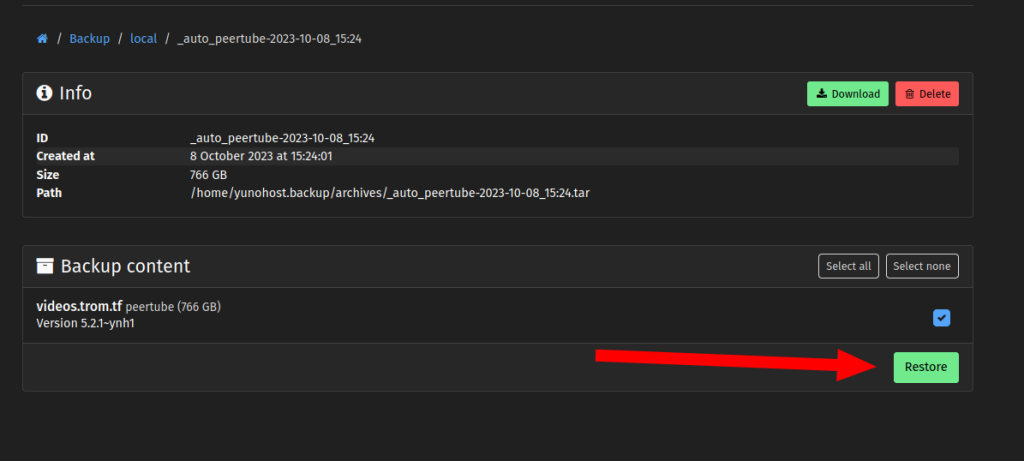

After that is done, go to your YNH Backup panel and restore the archive as usual. Click it and restore!

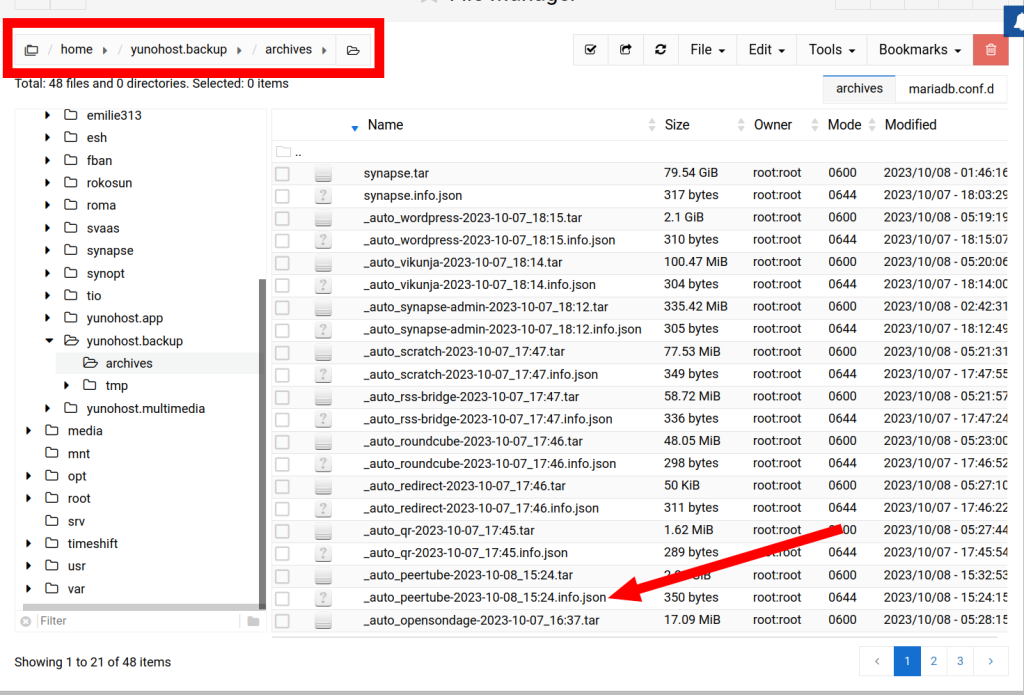

One small issue: YNH thinks it has to restore the entire 800GB, and if you do not have that much free storage space, the restore will fail. Go to your archive folder (I use Webmin):

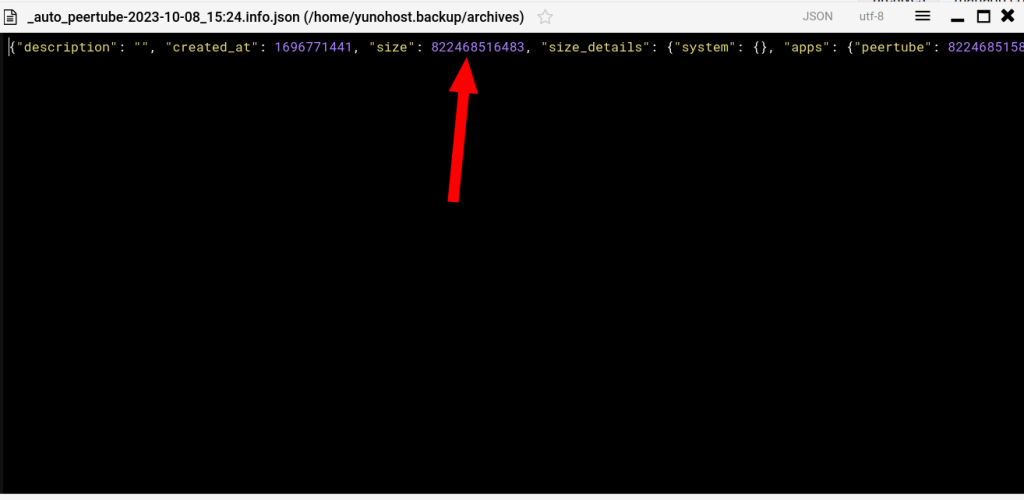

Then edit the .json file associated with your backup archive and change the size it records to any low number.
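The same edit can be done from the command line. A minimal sketch with `jq`, assuming the file is the `<archive>.info.json` next to the archive in /home/yunohost.backup/archives/ and that the value to lower is its top-level `size` field; both are assumptions, so look at your own file first (if it also lists per-app sizes, those may need lowering too). The helper name is hypothetical, and it keeps a `.bak` copy of the original.

```shell
#!/usr/bin/env bash
# Lower the recorded size in a YNH backup's .json info file so the restore
# does not refuse to start for lack of free space. Keeps a .bak copy.
# Assumption: the value to change is the top-level "size" field.
shrink_recorded_size() {
  local info_json="$1"   # e.g. /home/yunohost.backup/archives/<archive>.info.json
  local tmp
  tmp="$(mktemp)" || return 1
  if cp "$info_json" "$info_json.bak" && jq '.size = 1' "$info_json" > "$tmp"; then
    # Write back in place so the file keeps its original owner and permissions
    cat "$tmp" > "$info_json"
  else
    rm -f "$tmp"
    return 1
  fi
  rm -f "$tmp"
}
```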

Then restore the normal way via the YNH panel.

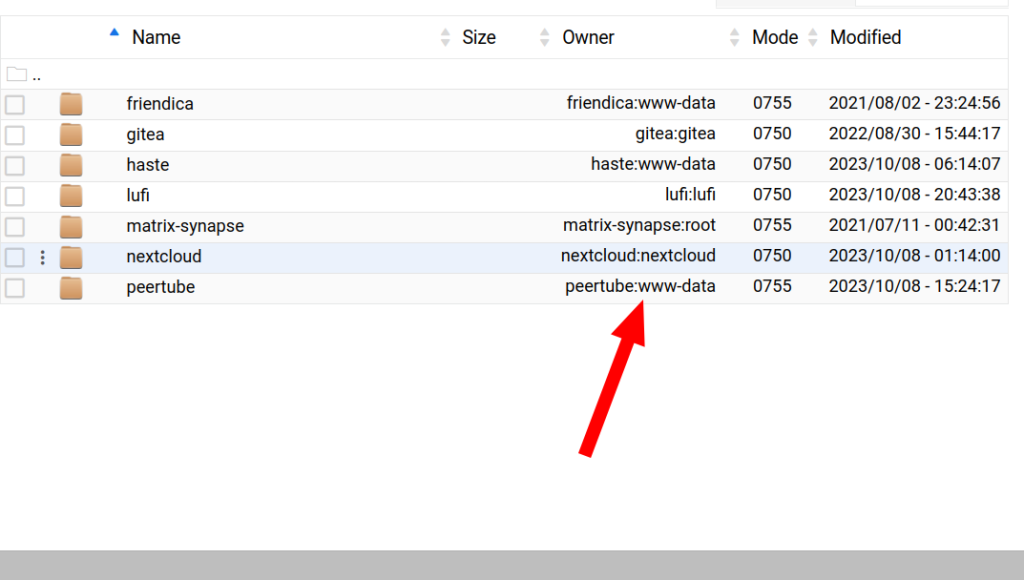

Another issue: at times the ownership of the downloaded data is not correct. For example, for Peertube I had to change it to peertube:www-data.
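On the command line, that is a recursive `chown` on the app's data folder. A sketch using the Peertube values from this post (user `peertube`, group `www-data`, data in /home/yunohost.app/peertube); the default group and the helper name are assumptions, and other apps may need a different user or group, so check what a working install uses before copying this.

```shell
#!/usr/bin/env bash
# Recursively reset ownership of an app's data folder after extracting it from Borg.
# The group defaults to www-data, which is what Peertube needed here.
fix_app_data_owner() {
  local app="$1" group="${2:-www-data}"
  chown -R "$app:$group" "/home/yunohost.app/$app"
}

# Usage (as root):
#   fix_app_data_owner peertube
```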

But that’s basically it for an app that has a small database and a small archive overall, but huge amounts of data.

If you are dealing with a huge database, restore manually via the command line (SSH):

```shell
# Restore only the friendica app from the named archive
yunohost backup restore _auto_friendica-2023-10-08_22:26 --apps friendica
```
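To get the exact archive name for that command, and to check what an archive contains before starting a long restore, the YNH command line can list the local archives and show one archive's details (`yunohost backup list`, `yunohost backup info`). A sketch; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Show the local YNH backup archives, then the details of the one you plan
# to restore, so the name passed to "yunohost backup restore" is exact.
inspect_backup() {
  local archive="$1"   # e.g. _auto_friendica-2023-10-08_22:26
  yunohost backup list
  yunohost backup info "$archive"
}

# Usage (as root):
#   inspect_backup _auto_friendica-2023-10-08_22:26
```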

That’s the main difference for big backups. I wish it could all be done via the YNH panel, but it seems unreliable for such massive archives...

Overall, if it wasn’t for YNH, I would give up. No TROM.tf, for sure. YNH is brilliant, I swear! Despite some hiccups here and there, it is amazing.

Conclusions and the future of trom.tf

I was forced to move to new hosting services, and that was good, because now I know that with just our Borg backups I can move anywhere in a matter of days. I also chose 2 different companies this time, so as not to put all our eggs in one basket.

The one thing TROM.tf will need in the future is disk space; everything else is more than enough. I tried using Peertube with object storage, which you can always scale up, but it was not reliable. To me it is so much easier and more reliable to have the data stored on the same server.

Luckily, with Hetzner we can add 2 more physical drives to our dedicated server. Here is a list of prices. For now we have enough, even with Timeshift’s backups taking up some 500GB: we still have some 1.5TB to spare, enough for 2 years or so, I guess. And if we ever need more, we can add another 2TB for 17 Euros more a month, or move to HDDs for storage and add up to 16TB or more quite cheaply.

So I am doubly happy: first, to move away from Cuntabo, and second, to be able to scale up TROM.tf and make it reliable for the years to come!!

Quick note: so far, Hetzner has not asked me to send them a photo of my passport and my face, like the crazy Webdock people did... so far... we will see...